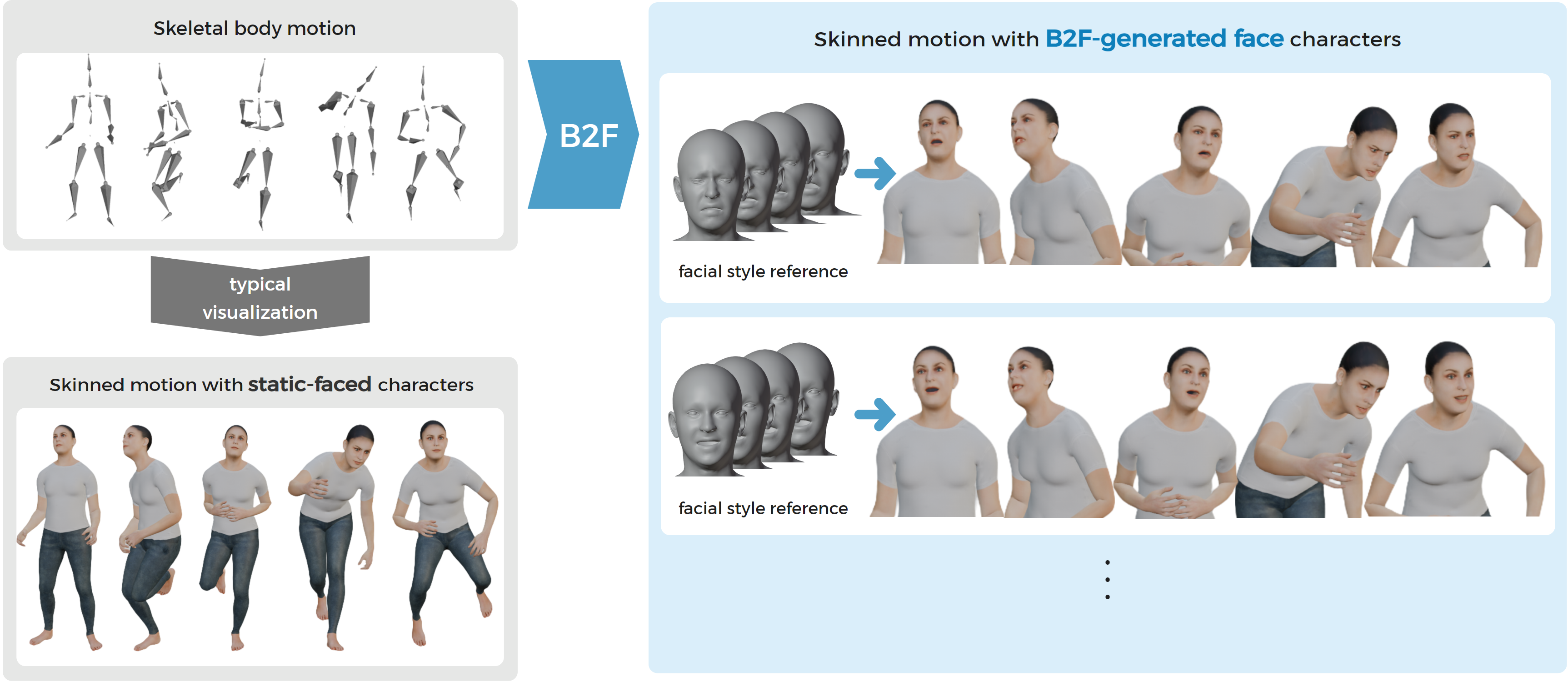

B2F: End-to-End Body-to-Face Motion Generation with Style Reference

B2F generates facial expressions that align with body motions, enhancing the cohesiveness of character animation. Conditioned on a style reference, it produces diverse and expressive facial motions.

Video

Abstract

Human motion naturally integrates body movements and facial expressions, which observers perceive as a single, unified impression. If a virtual character’s facial expression does not align well with its body movements, the character may no longer be perceived as a cohesive whole. Motivated by this, we propose B2F, a model that generates facial motions aligned with body movements. B2F takes a facial style reference as input and generates facial animations that reflect the provided style while remaining consistent with the associated body motion. To achieve this, B2F learns a disentangled representation of content and style using alignment and consistency-based objectives. We represent style using discrete latent codes learned via the Gumbel-Softmax trick, enabling diverse expression generation with a structured latent representation. B2F outputs facial motion in the FLAME format, making it compatible with SMPL-X characters, and supports ARKit-style avatars through a dedicated conversion module. Our evaluations show that B2F generates expressive and engaging facial animations that synchronize with body movements and style intent, mitigates perceptual dissonance from mismatched cues, and generalizes across diverse characters and styles.
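For readers unfamiliar with the Gumbel-Softmax trick mentioned in the abstract, the following is a minimal, generic sketch of how a relaxed sample over discrete codes can be drawn. It is not the B2F implementation: the function names `gumbel_softmax` and `to_one_hot`, the three-way codebook, and the temperature values are all assumptions chosen for illustration, and the sketch uses only the Python standard library.

```python
import math
import random


def gumbel_softmax(logits, tau=1.0, rng=random):
    """Draw a relaxed one-hot sample over len(logits) categories.

    Hypothetical helper for illustration; not taken from the B2F code.
    """
    eps = 1e-12
    noisy = []
    for logit in logits:
        # Clamp u away from 0 and 1 so both logarithms stay finite.
        u = min(max(rng.random(), eps), 1.0 - eps)
        # Gumbel(0, 1) noise: g = -log(-log(u)).
        g = -math.log(-math.log(u))
        noisy.append((logit + g) / tau)
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    m = max(noisy)
    exps = [math.exp(x - m) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def to_one_hot(probs):
    """Discretize a relaxed sample by taking its argmax."""
    k = max(range(len(probs)), key=probs.__getitem__)
    return [1 if i == k else 0 for i in range(len(probs))]


if __name__ == "__main__":
    rng = random.Random(0)
    style_logits = [1.0, 2.0, 0.5]  # assumed scores for three style codes
    soft = gumbel_softmax(style_logits, tau=0.5, rng=rng)
    print(soft, to_one_hot(soft))
```

Lower values of `tau` push the relaxed sample closer to a one-hot vector, while higher values make it smoother. In gradient-based training, the discrete `to_one_hot` output is commonly paired with the soft sample through a straight-through estimator; whether B2F does so is not stated here.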

Paper

Publisher: page, paper

arXiv: page, paper

Presentation

Pacific Graphics 2025 Presentation Slides: pdf (4.1MB), pptx (170.9MB)